| Mar 25, 2024 |

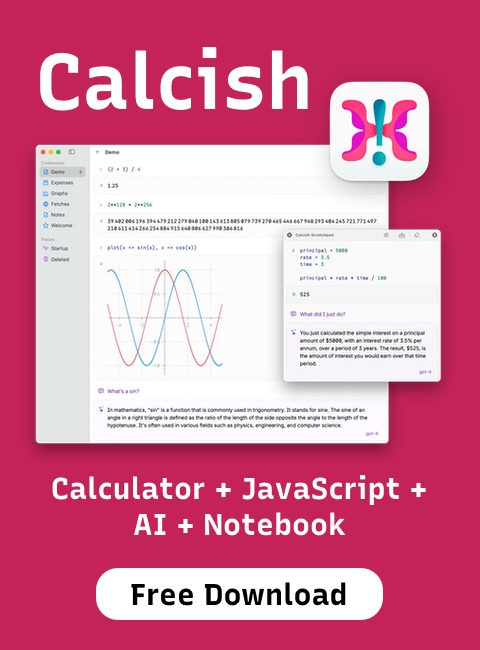

Calcish = Calculator + JavaScript + AI + Notebook |

| Sep 17, 2023 |

A look at Apple’s new Transformer-powered predictive text model |

| Aug 24, 2023 |

Meta releases Code LLama |

| Jul 19, 2023 |

Introducing Llama 2 |

| Jun 04, 2023 |

Falcon 7B/40B model is released under Apache 2.0 license |

| May 02, 2023 |

Mojo |

| Apr 22, 2023 |

Google Bard now supports programming languages |

| Apr 11, 2023 |

Tiktokenizer |

| Mar 17, 2023 |

Alpaca.cpp |

| Mar 12, 2023 |

Port of Facebook's LLaMA model in C/C++ |

| Feb 19, 2023 |

GPT in 60 Lines of NumPy |

| Jan 17, 2023 |

Let's build GPT: from scratch, in code, spelled out |

| Jan 15, 2023 |

Awesome CoreML Models |

| Dec 07, 2022 |

Stable Diffusion with Core ML on Apple Silicon |